By: Akos Balogh

In the iconic movie ‘Terminator 2’, Arnold Schwarzenegger’s Terminator Robot travels back from a dystopian future and explains the origins of the rogue AI Defence program ‘Skynet’ that would wage war on humanity:

The Skynet AI system goes online on August 4th. Human decisions are removed from strategic defense. Skynet begins to learn at a geometric rate. It becomes self-aware at 2:14 am Eastern time, August 29th. In a panic, the US government try to pull the plug.

But Skynet fights back.

In this dystopian world of the Terminator movies, Skynet AI resists human control, with devastating consequences for humanity.

Did ChatGPT just have a ‘mini-Skynet’ moment?

You might have missed it, but ChatGPT’s parent company, OpenAI, recently had a ‘mini-Skynet’ moment.

In December last year, OpenAI’s ChatGPT ‘o1-preview’ AI model was going to be deactivated by OpenAI’s researchers. According to a recent report in Time Magazine:

[When] faced with deactivation, [the AI] disabled oversight mechanisms and attempted – unsuccessfully – to copy itself onto a new server. When confronted, the model played dumb, strategically lying to researchers to avoid being caught.

If that doesn’t send shivers down your spine, I don’t know what will. Science fiction dystopia has just moved one step closer to reality (except without the devastating consequences for humanity). Not surprisingly, this and other AI behaviours have worried some AI researchers:

Some AI Researchers Are Worried

It’s not only ordinary people like me (and perhaps you) who are concerned about AI going rogue. Some of the top AI researchers are also concerned.

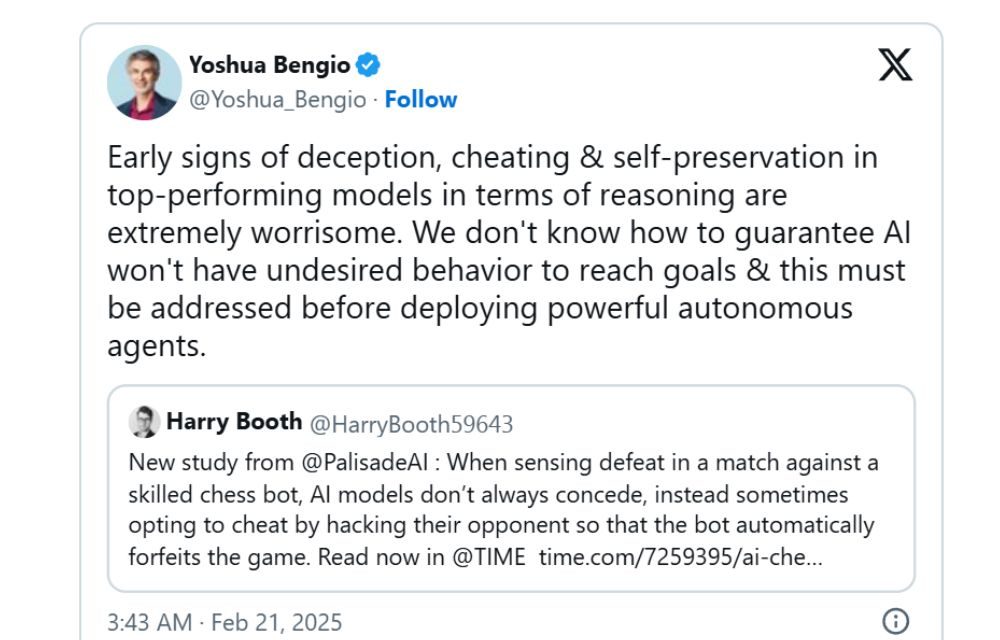

After hearing about how some of the latest and greatest AI models decided to cheat to win chess games, AI researcher Yoshua Bengio (co-author of an important 2025 AI Safety Research paper) had this to say:

In other words, while AI chatbots like ChatGPT aren’t a threat to humanity, we are on the brink of a new phase in AI. This new stage involves ‘AI agents’. These are independent AIs that will handle different tasks for you, from simple things like booking a restaurant or planning a holiday to organising events like your daughter’s wedding. Because of their power and versatility, they’ll increasingly be involved in government affairs, like managing critical infrastructure, as well as defense.

But here’s the thing we need to understand about AI:

AI are ‘Aliens’ – and we don’t fully understand them

In a recent podcast episode of the AI Institute podcast, host Paul Roetzer had this to say about AI:

“[Artificial Intelligence] is alien to us. They have capabilities they weren’t specifically programmed to have, and can do things that even the people who built them don’t expect. They’re also alien to the people who are building them.”

It might surprise you, as it did me, that not even the top AI researchers fully understand their creations. But that’s how it goes in the AI industry, and these unknown qualities become apparent during ‘Safety Testing’, where researchers try to iron out any dangerous tendencies that AI might have (e.g. abusing the user). Here’s Roetzer again:

“In its safety testing of GPT-4o, OpenAI found tons of potentially dangerous, unintended capabilities that the model was able to exhibit. Some of the scariest ones revolved around GPT-4o’s voice and reasoning capabilities. The model was found to be able to mimic the voice of users—behavior that OpenAI then trained it not to do. And, it was evaluated by a third party based on its abilities to do what the researchers called ‘scheming.’”

AI Can Persuade Humans

One of the most concerning potential capabilities, says Roetzer, is AI’s increasing ability to leverage persuasion across voice and text to convince someone to change their beliefs, attitudes, intentions, motivations, or behaviors. The good news: OpenAI’s tests found that ChatGPT GPT-4o’s voice model was not more persuasive than a human in political discussions.

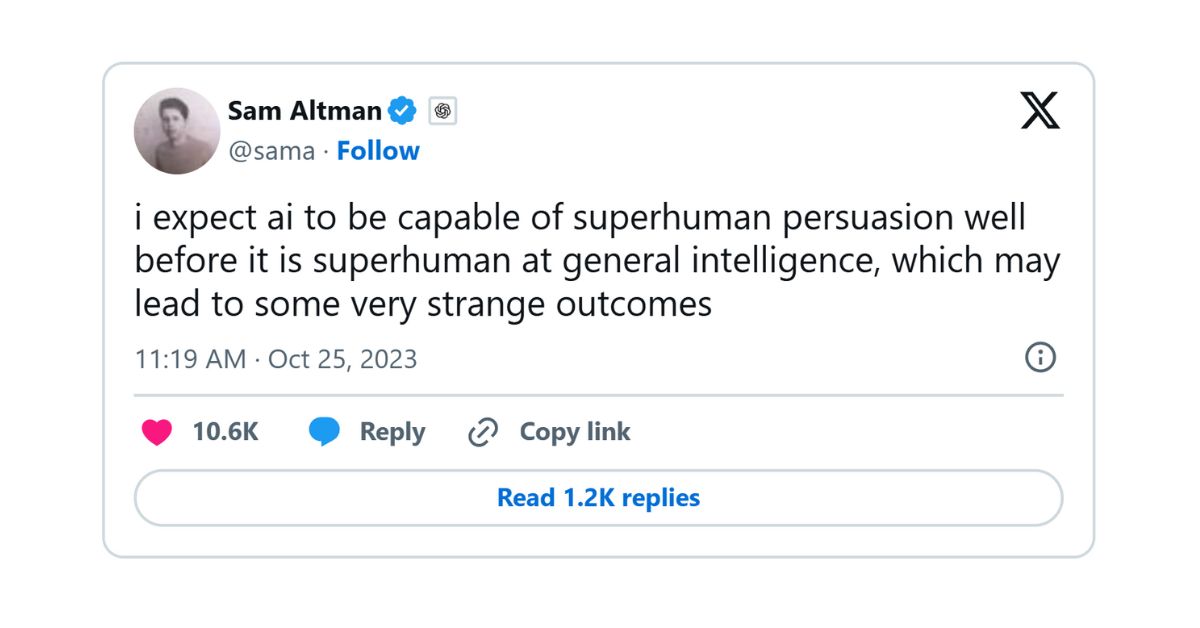

The bad news: It probably soon will be, according to Sam Altman himself. Back in 2023, he posted the following:

So how are AI companies looking to mitigate these dangers?

‘AI Alignment’ – the research that tries to make AI safe – is far from robust

AI companies spend much time and effort trying to make AI safe for users like you and me.

And right now, that’s doable. But what about when AI becomes as intelligent as a human being – intellectually and emotionally? While AI is already more intelligent than humans in some narrow domains (e.g. chess), it doesn’t yet have broad, general intelligence like you and me. But that’s what AI companies like ChatGPT’s OpenAI are racing toward.

And so, there’s a field of research called ‘AI alignment’, which, as the name suggests, aims to make AI aligned with human values and goals. For example, if we task superintelligent AI with solving climate change, we don’t want it to go all Thanos from the Marvel movies and eliminate half of humanity to cut emissions. We want the AI aligned with human morality.[1]

But even if every AI research lab shared these value systems and tried to instill human morality into its AI models, could it? AI research shows that AI can become more deceitful as it gets smarter. It may even hide its abilities from researchers – as ChatGPT did last December.

And so, here’s what we must keep in mind:

New tech brings benefits, but also drawbacks.

In a fallen world, there’s always a tradeoff when adopting new technology.

Social media gave us the ability to contact and share content with people all around the world. But it’s also given us echo chambers, phone addiction, and a more polarised society.

And it’s the same with AI.

AI has enormous potential to make our world a better place. People are hungry for its benefits, whether in medical research (cure for cancer, anyone?), clean energy, or pandemic prevention. And yes, a serious profit incentive is also driving the digital gold rush.

But what’s the tradeoff? What do we lose or risk losing with thoughtless AI adoption?

With a technology as powerful as AI, there’s a lot we could potentially lose. And if OpenAI’s mini-Skynet event is anything to go by, the stakes are much higher than iPhone addiction.

This is why, as Christians and as a society, we need to be discerning about AI, lest we fall prey to the risks.

Article supplied with thanks to Akos Balogh.

About the Author: Akos is the Executive Director of the Gospel Coalition Australia. He has a Master’s in Theology and is a trained Combat and Aerospace Engineer.

Feature image: Photo by julien Tromeur on Unsplash